MLflow

WIP / PLACEHOLDER FILE

Why use MLflow

AI Platform allows teams to run their notebooks and pipelines using an MLflow plug-in.

MLflow is an open source platform for managing the end-to-end machine learning lifecycle. It tackles four primary functions:

- Tracking experiments to record and compare parameters and results (MLflow Tracking).

- Packaging ML code in a reusable, reproducible form in order to share with other data scientists or transfer to production (MLflow Projects).

- Managing and deploying models from a variety of ML libraries to a variety of model serving and inference platforms (MLflow Models).

- Providing a central model store to collaboratively manage the full lifecycle of an MLflow Model, including model versioning, stage transitions, and annotations (MLflow Model Registry).

MLflow is library-agnostic. You can use it with any machine learning library, and in any programming language, since all functions are accessible through a REST API and CLI. For convenience, the project also includes a Python API, R API, and Java API.

In the following sections, you can find instructions for using MLflow in AI Platform.

MLflow server setup

To automatically set up your own MLflow server, follow these steps:

- Create a storage account in the Azure Portal. After creating the account, collect the following values:

  - Azure Storage Account

  - Container Name

  - Azure Storage key

  If the storage account is new, generate the key in the Azure Portal by going to Storage account > Security + networking > Access keys. Copy the key and the storage account name for use in the following steps.

- Go to the AI Platform dashboard.

-

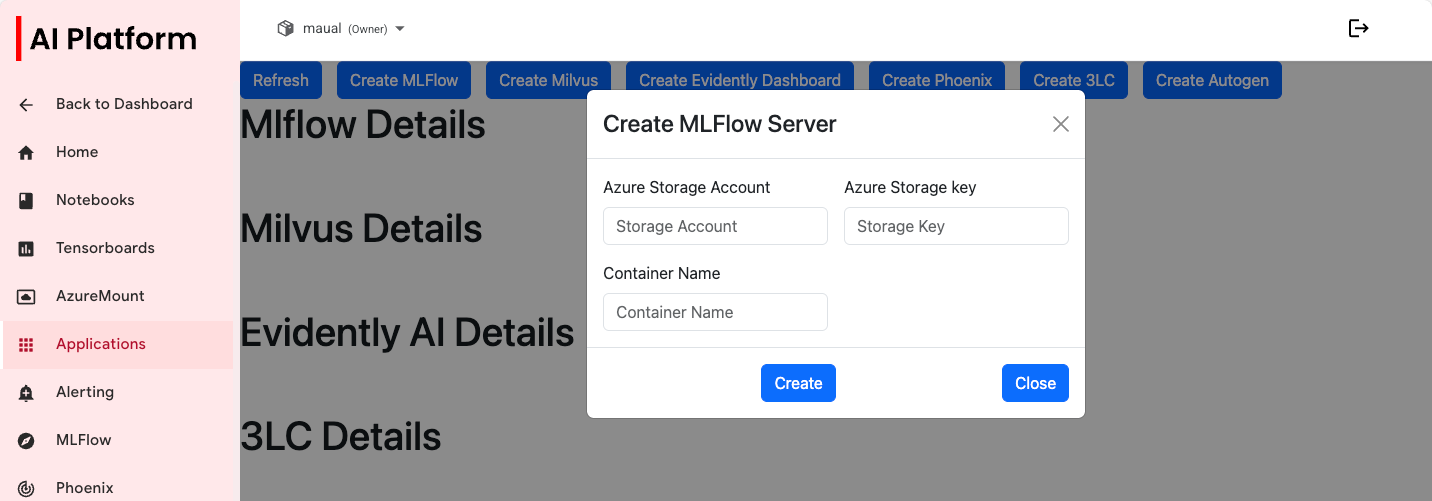

In the side menu, go to Applications and then do the following:

-

Select Create MLflow to open the settings dialog box.

Create MLflow server settings dialog box.

Create MLflow server settings dialog box. -

Enter the corresponding values.

-

Click Create. This will spin up the relevant resources in the background for you.

-

Click Refresh.

infoYou will get information relevant to your MLflow server. This includes the internal URL which will be used when you track experiments, as well as the external URL, which directs you to the UI. This UI can also now be seen in the MLflow tab on the left-hand side menu.

-

View MLflow tracking UI in browser

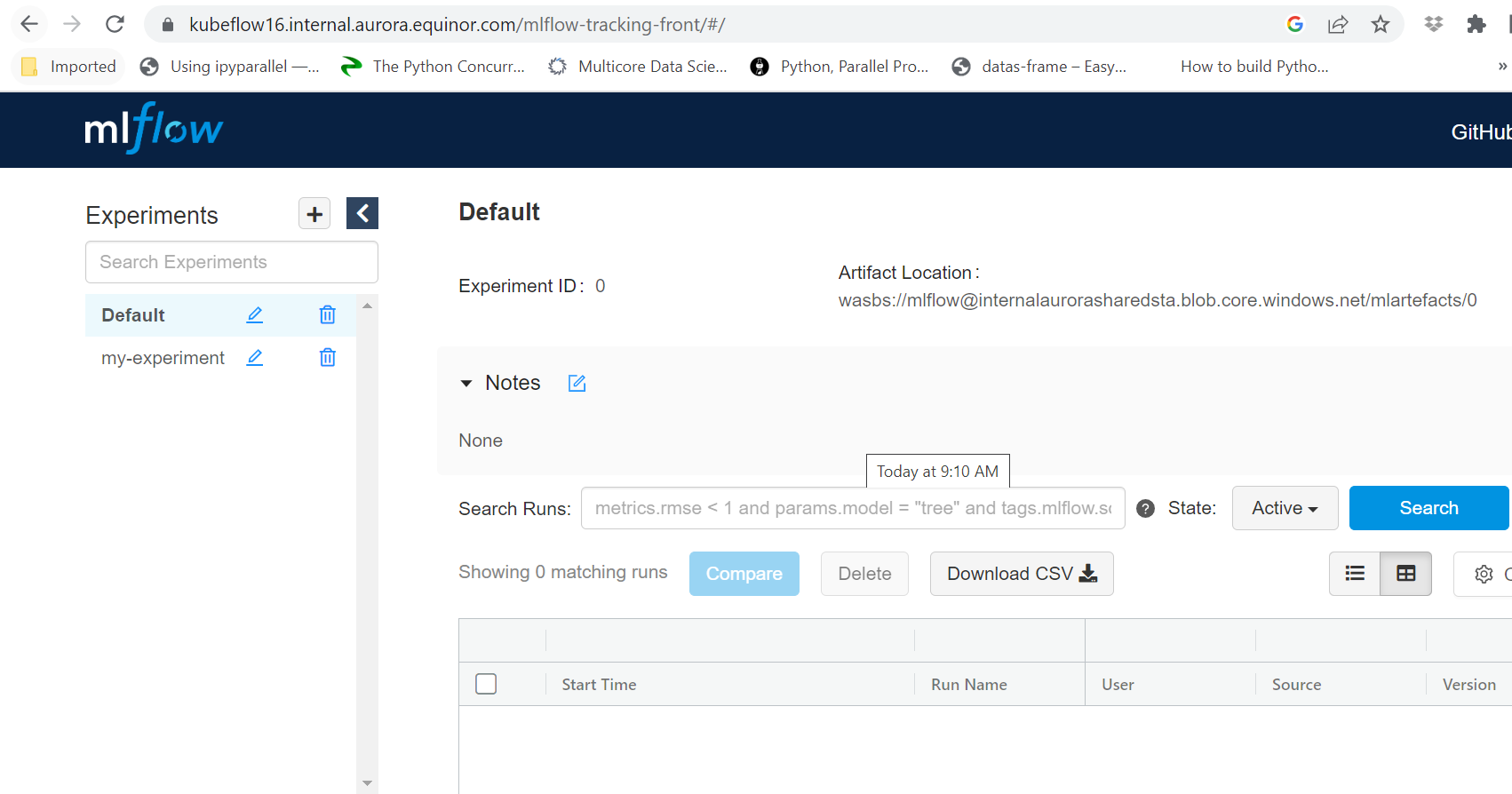

In your browser, replace the placeholders and go to the following URL:

https://{Cluster_Domain}/mlflow-tracking-front-{project_name}/

For example: https://kubeflow16.internal.aurora.equinor.com/mlflow-tracking-front/

You should see MLflow Tracking UI in the browser:

MLflow tracking UI

MLflow tracking UI
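As a small illustration of filling in the URL template above, the sketch below builds the address from the two placeholders. The function name and the example values are hypothetical; only the URL pattern comes from this page.

```python
def tracking_ui_url(cluster_domain: str, project_name: str) -> str:
    """Fill in the MLflow Tracking UI URL template shown above."""
    # Strip stray slashes so the pieces join cleanly.
    domain = cluster_domain.strip("/")
    project = project_name.strip("/")
    return f"https://{domain}/mlflow-tracking-front-{project}/"


# Hypothetical values, for illustration only.
print(tracking_ui_url("cluster.example.com", "my-project"))
```

Replace the example values with your own cluster domain and project name before opening the URL.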

Track ML experiments using MLflow

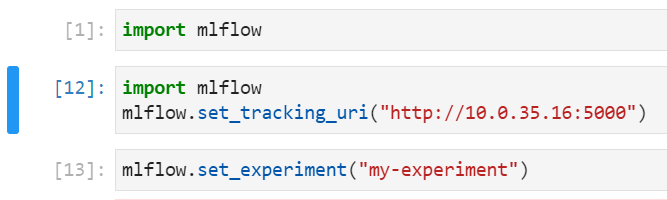

- In your

MLflowExperimentDemo.ipynbnotebook, set the following:- Tracking URI: use the IP address and port values displayed in the Applications > MLflow section of the AI Platform dashboard.

- Create/Set an Experiment: change name

Tracking URI and experiment in notebook

Tracking URI and experiment in notebook

-

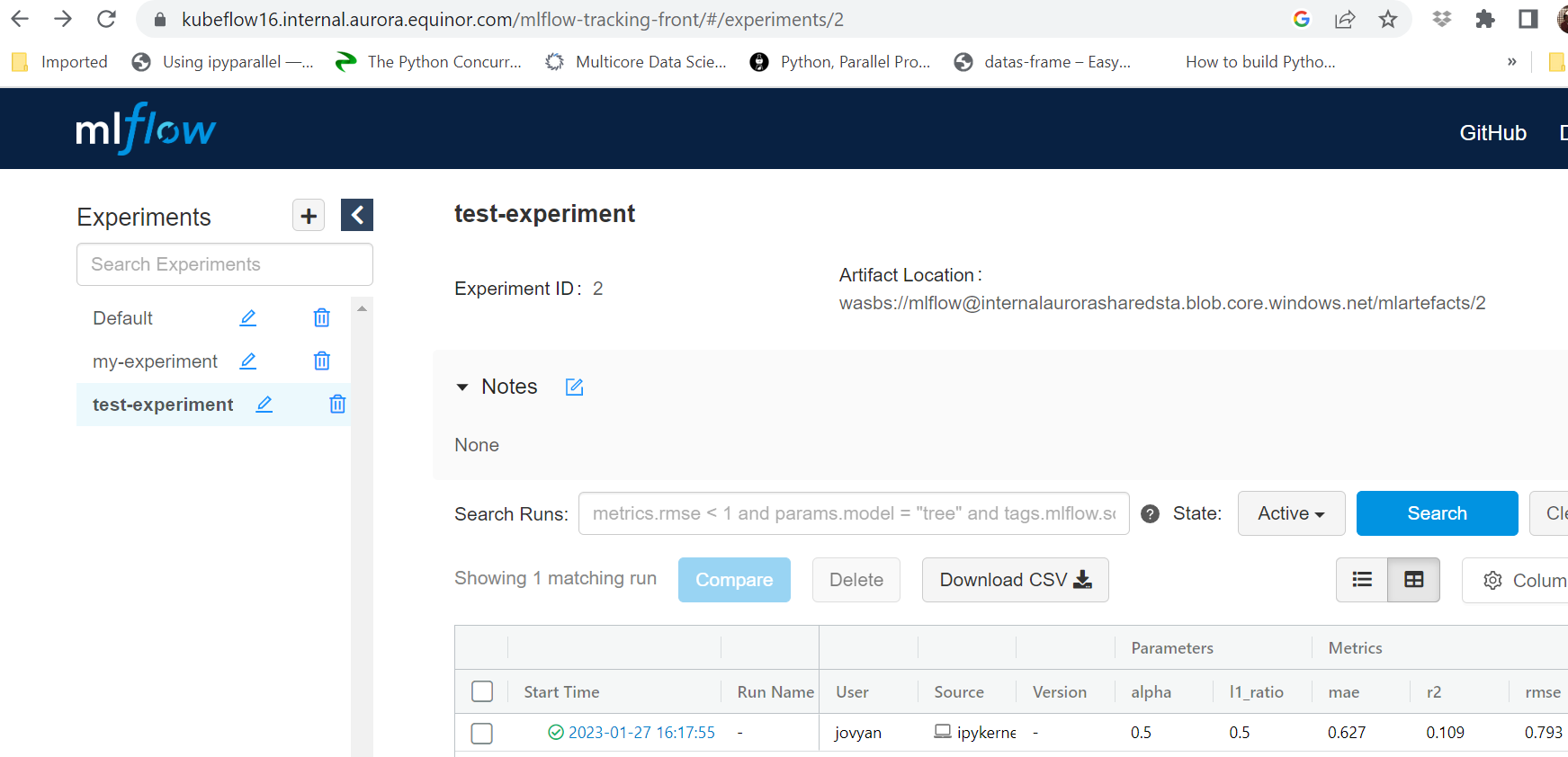

Run your

MLflowExperimentDemo.ipynbnotebook.After running the experiment, you can see the results of your experiment on MLflow UI.

Experiments in MLflowBest practice

Experiments in MLflowBest practiceRunning the experiment directly from the notebook will not track the GitHub repository commit version to the experiment. This is not a recommended approach for proper experimentation tracking. Rather, you may use this method if you are testing temporarily from notebooks without lineage to a GitHub code version.
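For reference, the notebook settings described above (tracking URI and experiment name) might look roughly like the following sketch. It uses the standard MLflow Python calls `set_tracking_uri`, `set_experiment`, `start_run`, `log_param`, and `log_metric`. The helper names, the plain-HTTP scheme, and the logged values are assumptions for illustration, not the contents of the demo notebook.

```python
def build_tracking_uri(host: str, port: int) -> str:
    """Combine the IP address and port shown in the dashboard into a URI.

    Assumption: the server is reached over plain HTTP; adjust the scheme
    if your deployment differs.
    """
    return f"http://{host}:{port}"


def run_demo_experiment(host: str, port: int, experiment_name: str) -> None:
    """Point MLflow at the tracking server and log one illustrative run."""
    # Imported here so the URI helper above works without MLflow installed.
    import mlflow

    mlflow.set_tracking_uri(build_tracking_uri(host, port))
    # Creates the experiment if it does not exist yet, then makes it active.
    mlflow.set_experiment(experiment_name)

    with mlflow.start_run():
        mlflow.log_param("alpha", 0.5)   # placeholder parameter
        mlflow.log_metric("rmse", 0.78)  # placeholder metric
```

A call such as `run_demo_experiment("10.0.0.12", 5000, "my-demo-experiment")` (hypothetical address and name) would then create one run that is visible in the MLflow UI.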

To track experiments properly and record the GitHub commit version on each run, follow these steps:

- Commit all your latest changes to the GitHub repository so that the GitHub code version matches the experiments and runs being tracked.

- From the terminal, run the following command (defined in the `MLproject` file): `python MLflowExperimentDemo.py`
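The first step above (commit everything before running) can be checked automatically. The sketch below is one possible approach, assuming `git` is available on the path. The function names are hypothetical and are not part of MLflow or the demo repository.

```python
import subprocess


def is_clean(porcelain_output: str) -> bool:
    """Return True when `git status --porcelain` printed nothing.

    Untracked files also appear in that output, so they count as changes.
    """
    return porcelain_output.strip() == ""


def current_commit_if_clean(repo_dir: str = ".") -> str:
    """Return the HEAD commit hash, or raise if the working tree has changes."""
    status = subprocess.run(
        ["git", "status", "--porcelain"],
        cwd=repo_dir, capture_output=True, text=True, check=True,
    ).stdout
    if not is_clean(status):
        raise RuntimeError("Uncommitted changes found; commit them before running.")
    return subprocess.run(
        ["git", "rev-parse", "HEAD"],
        cwd=repo_dir, capture_output=True, text=True, check=True,
    ).stdout.strip()
```

Calling `current_commit_if_clean()` at the top of the script is one way to stop a run early when the code on disk does not match a commit.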

Log artifacts/logs (files/directory) using MLflow

-

In

MLflowArtifactTrackingDemo.ipynbandMLflowLogTrackingDemo.ipynb, set the following:- Tracking URI: use the IP address and port values obtained previously

- Create/Set an Experiment: change name

-

Run your

MLflowArtifactTrackingDemo.ipynbandMLflowLogTrackingDemo.ipynbnotebooks. After running the notebooks, you can view the artifacts on MLflow UI. -

To log GitHub commit code versions properly, you need to generate a Python script from the notebook and specify to run that as an entrypoint in the

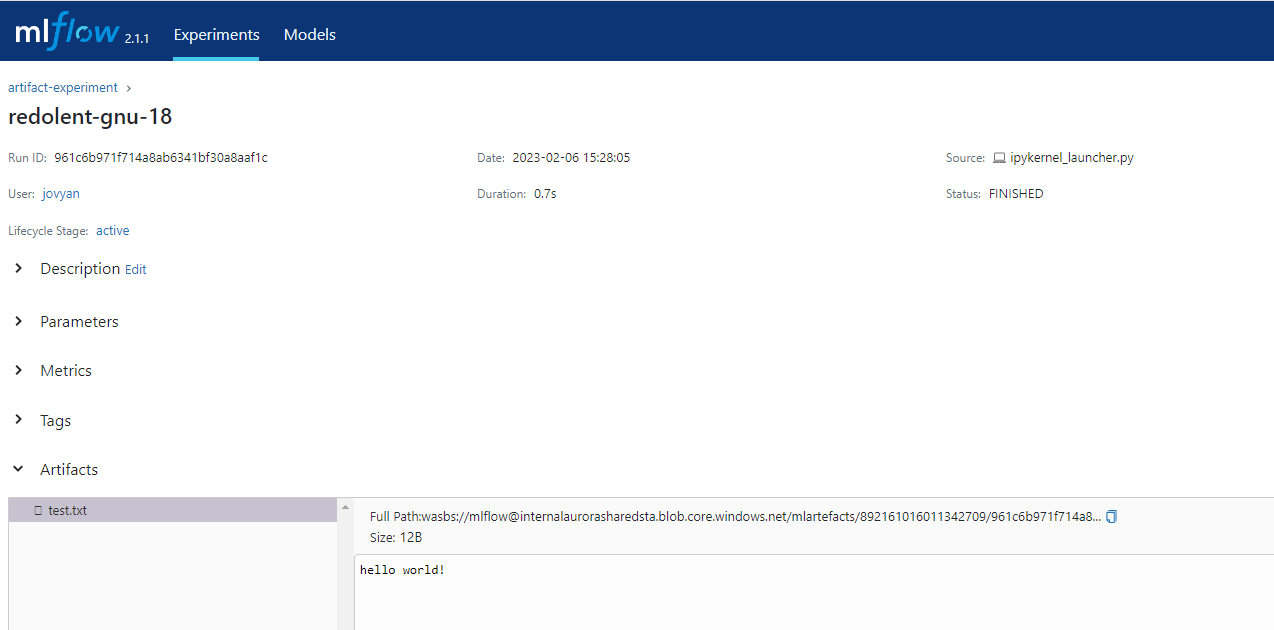

MLprojectfile. Then, you may run the script using that command from the terminal. Artifacts in MLflow experiments

Artifacts in MLflow experiments
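As a rough sketch of what logging files and directories can look like, the example below writes a small log file and uploads it with MLflow's `log_artifact` (single file) and `log_artifacts` (whole directory). The helper names, the file name, and the file contents are assumptions for illustration and do not reproduce the demo notebooks.

```python
from pathlib import Path


def write_example_log(directory: Path) -> Path:
    """Create a small text file to use as an example artifact."""
    directory.mkdir(parents=True, exist_ok=True)
    log_file = directory / "training.log"
    log_file.write_text("epoch=1 loss=0.42\n", encoding="utf-8")
    return log_file


def log_example_artifacts(tracking_uri: str, experiment_name: str, directory: Path) -> None:
    """Log one file and one directory to the active MLflow run."""
    # Imported here so the file helper above works without MLflow installed.
    import mlflow

    mlflow.set_tracking_uri(tracking_uri)
    mlflow.set_experiment(experiment_name)
    log_file = write_example_log(directory)

    with mlflow.start_run():
        # Upload a single file to the root of the run's artifact store.
        mlflow.log_artifact(str(log_file))
        # Upload every file in the directory under the "logs" folder.
        mlflow.log_artifacts(str(directory), artifact_path="logs")
```

After a run, the uploaded files should appear under the run's Artifacts view in the MLflow UI.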

Log and register a scikit-learn ML model using MLflow

-

In

MLflowModelRegistrationDemo.ipynb, set the following:- Tracking URI: use the IP address and port values obtained previously

- Create/Set an Experiment: change name

-

Run the

MLflowModelRegistrationDemo.ipynbnotebook. After running the notebook, you can view the scikit-learn model saved and registered on MLflow UI. -

To log GitHub commit code versions properly, you need to generate a Python script from the notebook and specify to run that as an entrypoint in the

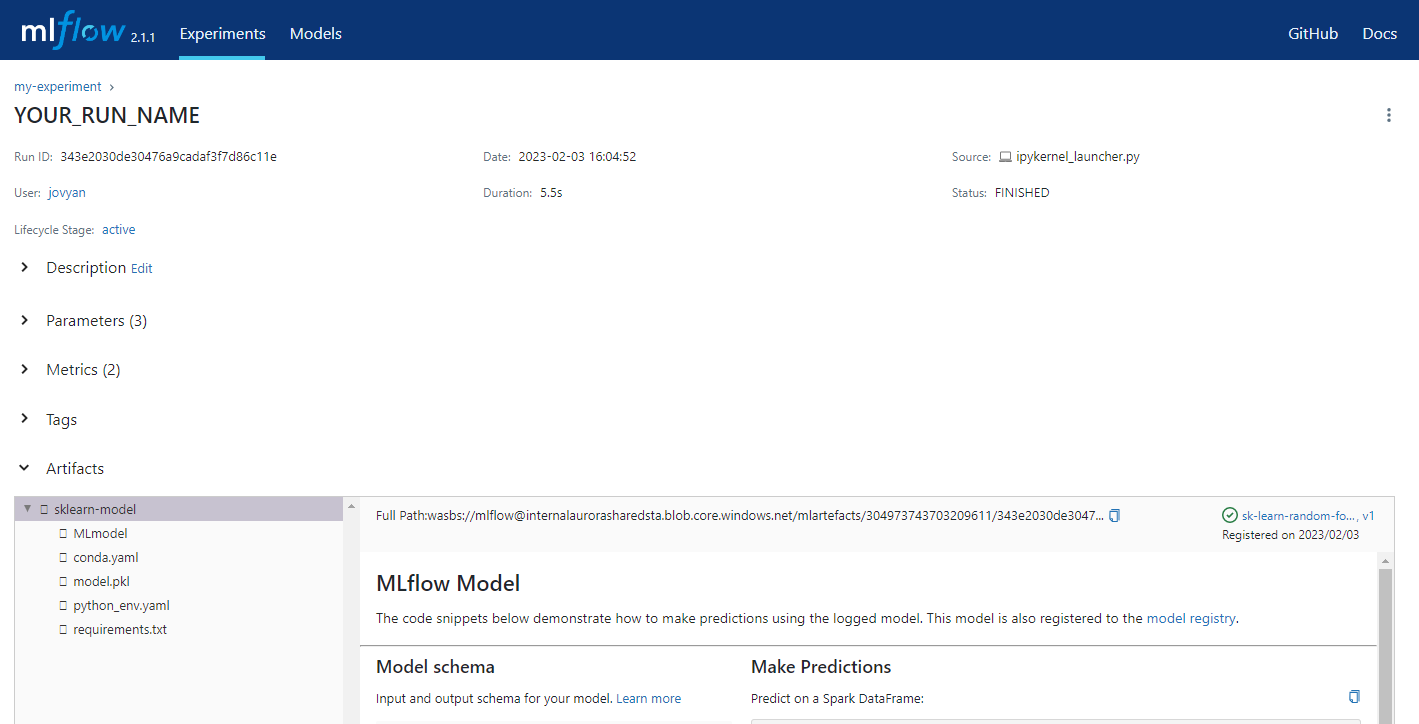

MLprojectfile. Then, you may run the script using that command from the terminal. Artifacts in MLflow experiments

Artifacts in MLflow experiments
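A minimal sketch of logging and registering a scikit-learn model is shown below, assuming `mlflow` and `scikit-learn` are installed. It relies on `mlflow.sklearn.log_model` with `registered_model_name`, which registers the logged model as a new version under that name. The dataset, model choice, and helper names are illustrative assumptions and are not taken from the demo notebook. Depending on your MLflow version, the `artifact_path` argument may be reported as deprecated in favor of `name`.

```python
def registered_model_uri(model_name: str, version: int) -> str:
    """Build the `models:/<name>/<version>` URI used to load a registered model."""
    return f"models:/{model_name}/{version}"


def train_and_register(tracking_uri: str, experiment_name: str, model_name: str) -> None:
    """Train a small classifier, then log and register it in one run."""
    # Imported here so the URI helper above works without these packages.
    import mlflow
    import mlflow.sklearn
    from sklearn.datasets import load_iris
    from sklearn.linear_model import LogisticRegression

    mlflow.set_tracking_uri(tracking_uri)
    mlflow.set_experiment(experiment_name)

    # Illustrative data and model only.
    X, y = load_iris(return_X_y=True)
    model = LogisticRegression(max_iter=200).fit(X, y)

    with mlflow.start_run():
        mlflow.log_metric("train_accuracy", float(model.score(X, y)))
        # Logging with a registered_model_name also creates a registry version.
        mlflow.sklearn.log_model(
            model,
            artifact_path="model",
            registered_model_name=model_name,
        )
```

Once registered, a specific version can be referenced with a URI such as `registered_model_uri("iris-classifier", 1)` (hypothetical name), for example when loading the model with `mlflow.sklearn.load_model`.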

Adding labels and tags to your experiments and models

For an example that covers experiment tracking, model registration, and tagging, run the following notebook: